    #creates all the tables and loads them with the pertinent info, temporarily places the table in a temporary table then into its new table
    df.to_sql('myTempTable', conn, if_exists='replace', index=False)
    cur.execute('INSERT OR IGNORE INTO PyCharmData SELECT * FROM MyTempTable')
    N.to_sql('myTempTable', conn, if_exists='replace', index=False)
    cur.execute("INSERT OR IGNORE INTO athName SELECT * FROM myTempTable")
    d.to_sql('myTempTable', conn, if_exists='replace', index=False)
    cur.execute("INSERT OR IGNORE INTO date SELECT * FROM myTempTable")
    R.to_sql('myTempTable', conn, if_exists='replace', index=False)
    cur. ...
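The staging pattern used in this thread (write the frame to `myTempTable`, then `INSERT OR IGNORE ... SELECT` into the real table) can be tried end to end with a minimal sketch like the one below. The table definition, the sample rows and the `stage_and_insert` helper are assumptions made for illustration, not the original poster's code.

```python
import sqlite3

import pandas as pd

conn = sqlite3.connect(":memory:")  # in-memory database, just for the demo
cur = conn.cursor()

# Hypothetical target table; the UNIQUE constraint is what lets OR IGNORE skip repeats
cur.execute("""
    CREATE TABLE IF NOT EXISTS athName (
        athlete_id INTEGER PRIMARY KEY,
        name TEXT NOT NULL UNIQUE
    )
""")


def stage_and_insert(frame, target):
    """Write frame to a scratch table, then copy over only non-conflicting rows."""
    frame.to_sql("myTempTable", conn, if_exists="replace", index=False)
    # target is a hard-coded, trusted table name here, never user input
    cur.execute(f"INSERT OR IGNORE INTO {target} SELECT * FROM myTempTable")
    conn.commit()


# Made-up sample rows
names = pd.DataFrame({"athlete_id": [1, 2], "name": ["Ann Lee", "Bo Diaz"]})
stage_and_insert(names, "athName")
stage_and_insert(names, "athName")  # loading the same rows again adds nothing

row_count = cur.execute("SELECT COUNT(*) FROM athName").fetchone()[0]
print(row_count)
```

Note that `SELECT *` only works if the frame's columns match the target table's columns in number and order, and that `OR IGNORE` skips rows only when the target table has a PRIMARY KEY or UNIQUE constraint for them to violate.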

    #pulls out the columns I need from main file and puts into a data frame
    df = rawdata[[...]]

    metrics_id INTEGER NOT NULL PRIMARY KEY AUTOINCREMENT UNIQUE,
    athlete_id INTEGER NOT NULL PRIMARY KEY AUTOINCREMENT UNIQUE,
    date_id INTEGER NOT NULL PRIMARY KEY AUTOINCREMENT UNIQUE,

    #date_id column is inserted into the file, id is given by the column Completion once per date etc


    #this creates a new column in the original file and assigns an id based on specific criteria.
    #athlete_id column is inserted into the file, id is given by the column Full name only once per name

I am having trouble creating the tables to store the data in SQLite. I'm trying to avoid duplicates by using "ids" instead of having the data all in one table.

    # 'Start', 'Completion', 'Email', 'Name', 'First Name', 'Last Name',
    # 'RPE', 'Duration', 'Sleep', 'Fatigue', 'Stress', 'Soreness', 'Mood',

    id INTEGER NOT NULL PRIMARY KEY AUTOINCREMENT UNIQUE,

    cur.execute('''INSERT OR IGNORE INTO AthName (name)
    cur.execute('SELECT id FROM AthName WHERE name = ? ', (N, ))

I would repeat lines 70 to 74 for each table created.

If anyone else comes along this thread and is in the same situation, I have worked out a solution. I'm sure it's not the best way to go about things, but it worked!

    rawdata = (csv file)
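For anyone following along, the "ids instead of one big table" idea comes down to an insert-then-look-up step per name. Here is a minimal sketch using only the standard `sqlite3` module. The `AthName` table and the `INSERT OR IGNORE` / `SELECT id` pair come from the thread; the `get_or_create_id` helper, the simplified column definition and the sample names are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory database, just for the demo
cur = conn.cursor()

# Simplified version of the thread's lookup table; UNIQUE on name prevents duplicates
cur.execute("""
    CREATE TABLE IF NOT EXISTS AthName (
        id INTEGER PRIMARY KEY AUTOINCREMENT,
        name TEXT NOT NULL UNIQUE
    )
""")


def get_or_create_id(name):
    """Return the id for name, inserting the row first if it is new."""
    cur.execute("INSERT OR IGNORE INTO AthName (name) VALUES (?)", (name,))
    cur.execute("SELECT id FROM AthName WHERE name = ?", (name,))
    return cur.fetchone()[0]


# Made-up sample names
first = get_or_create_id("Ann Lee")
second = get_or_create_id("Bo Diaz")
repeat = get_or_create_id("Ann Lee")  # already stored, so the same id comes back
conn.commit()
print(first, second, repeat)
```

The same two statements would be repeated for each lookup table (names, dates and so on), and the returned id is what gets stored in the main table in place of the repeated text.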

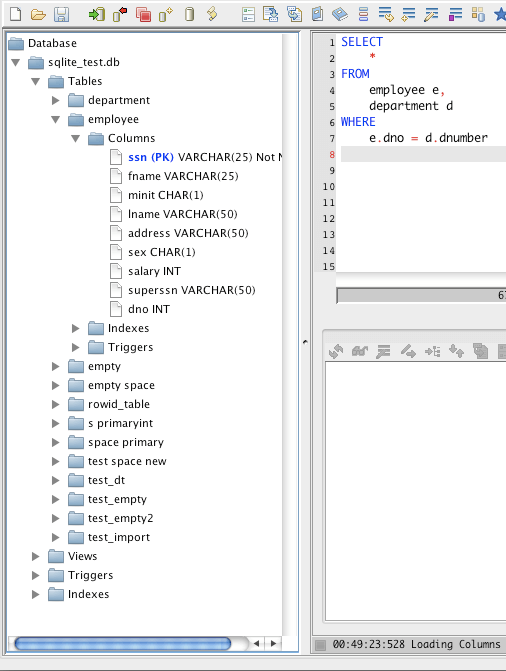

I am using Python3, PyCharm and DB Browser for SQLite. I am new to this domain and those are the tools I have learned so far. I am collecting daily data that goes to an Excel/CSV file. I am hoping to send the responses to a database in SQLite so I can then use Python to analyze and report. I'm hoping this is the right method but am open to suggestions.

Excel -> CSV -> Python -> SQLite, then Python to retrieve data when needed.
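As a rough illustration of that CSV -> Python -> SQLite -> Python flow, here is a small sketch using only the standard library. The `responses` table, its columns and the sample rows are made up for the example (the column names are borrowed from the list in this thread), so treat it as a starting point rather than the actual setup.

```python
import csv
import io
import sqlite3

# Made-up sample standing in for the exported CSV file
csv_text = """Name,Completion,RPE,Duration
Ann Lee,2021-03-01,6,45
Bo Diaz,2021-03-01,7,60
Ann Lee,2021-03-02,5,30
"""

conn = sqlite3.connect(":memory:")  # pass a file path instead to keep the data
conn.execute("""
    CREATE TABLE IF NOT EXISTS responses (
        name TEXT NOT NULL,
        completion TEXT NOT NULL,
        rpe INTEGER,
        duration INTEGER,
        UNIQUE (name, completion)
    )
""")

# CSV -> Python: read each row and convert the numeric columns
rows = [
    (r["Name"], r["Completion"], int(r["RPE"]), int(r["Duration"]))
    for r in csv.DictReader(io.StringIO(csv_text))
]

# Python -> SQLite: OR IGNORE makes re-importing the same file harmless
conn.executemany("INSERT OR IGNORE INTO responses VALUES (?, ?, ?, ?)", rows)
conn.commit()

# SQLite -> Python: pull the data back out when it is needed for a report
avg_rpe = dict(conn.execute(
    "SELECT name, AVG(rpe) FROM responses GROUP BY name ORDER BY name"
))
print(avg_rpe)
```

With a real export, `io.StringIO(csv_text)` would be replaced by an opened file, and the database can be inspected in DB Browser for SQLite once it is written to disk.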
